Get human feedback for AI. Build smarter models, agents & AI product experiences.

Target the right consumers and experts to power AI product research, RLHF, evals, fine-tuning, and data collection.

Target participants on hundreds of criteria, including location (countries around the world), demographics (age, gender, income, etc.), devices, employment information, custom screening questions, and more.

Run flexible tests, distribute tasks and surveys, and collect data and feedback for AI on our platform or with any tools you want. Get high-level AI product feedback, or conduct RLHF, evals, fine-tuning, and data collection.

Our platform allows you to easily manage large groups of testers through test processes that span days or weeks.

Why are you building your AI products with bad data?

- Dirt-cheap "research" platforms get you dirt-cheap data. Your customers don't make $5 per hour and click MTurk links all day, so the feedback and data you're gathering to improve your AI products isn't helping.

- We have a quality panel of 450,000+ real consumers and professionals, with hundreds of recruiting criteria. Just about every country around the world is represented, with more than 150,000 people in the USA.

- Superpower your AI feedback and data collection. Get high-level feedback on agents and product experiences, or directly improve your model through RLHF, evals, fine-tuning, and data collection.

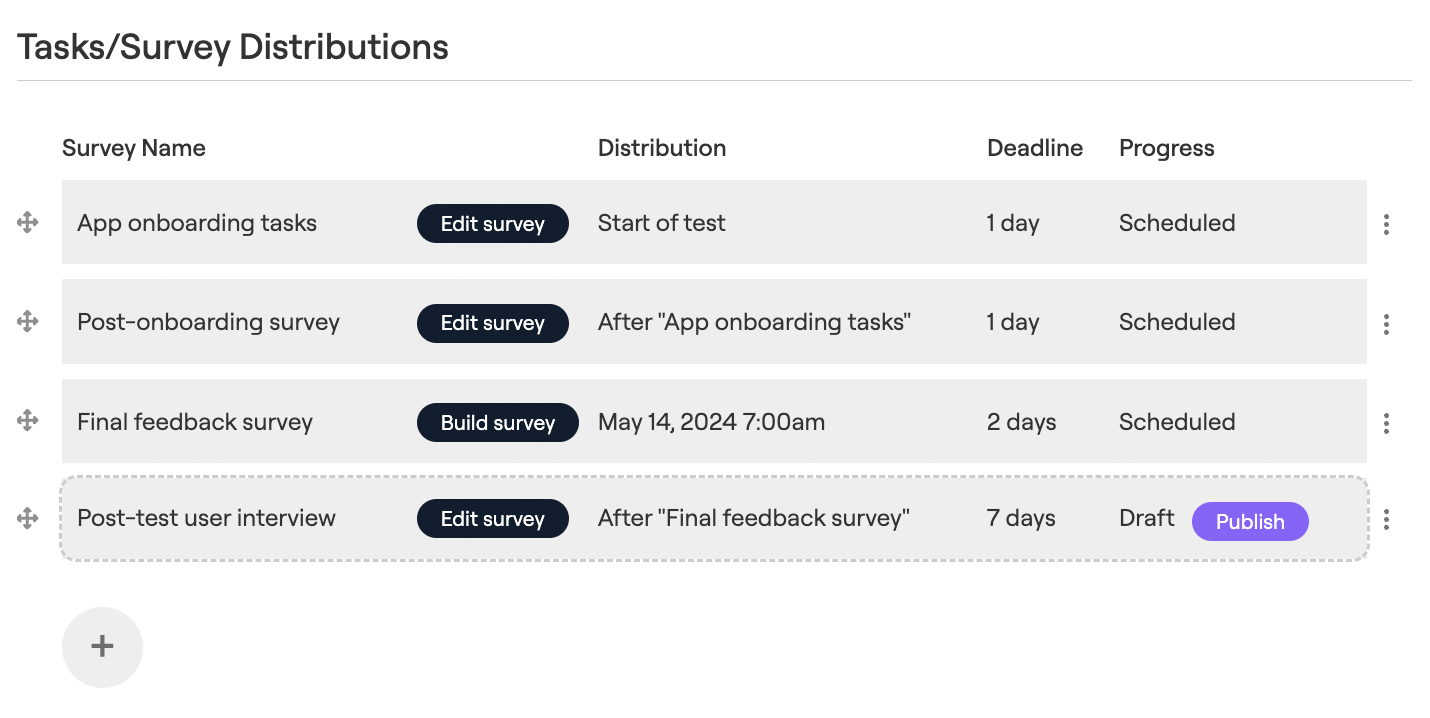

Design custom workflows for any testing, feedback, and data gathering process

Create any test process, even those that span multiple days or weeks with our Test Workflow builder.

Collect data in your app or through our platform

Get the exact data you need in whatever format you need it. Have testers use your product or chosen platform to provide engagement, feedback, or labeling/annotation, or use the BetaTesting platform.

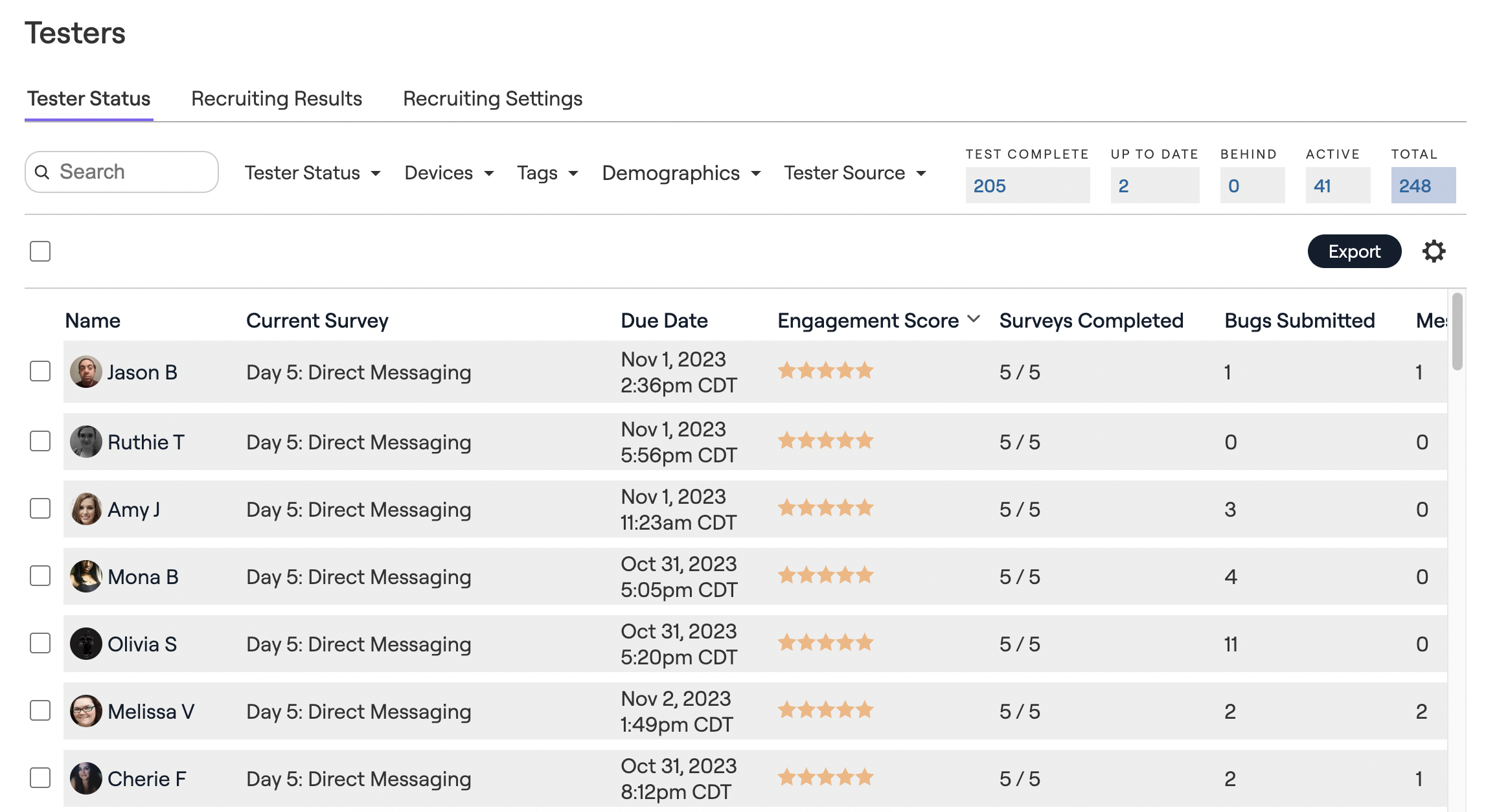

Manage large groups of testers without any headaches

- Optionally require that all testers agree to NDA terms

- Re-target past testers, build a tester community, and create cohorts and groups via tags

- Message testers one-to-one or send broadcast messages en masse

Case Studies: Human feedback for AI products

BetaTesting gathered in-car images from hundreds of users to power AI and machine learning for global automotive leader Faurecia

BetaTesting recruited hundreds of targeted users with a wide variety of car models around the world.

Participants provided photos several times per day over a week at specific angles and in various weather conditions.

Each image was tagged to allow automated processing with AI and machine learning software.

Get in Touch

Iams collected pictures and videos of dog nose prints to improve its app to help rescue dogs

Just as human fingerprints are one-of-a-kind, each dog’s nose print is distinct due to its unique pattern of ridges and grooves.

BetaTesting recruited owners of a wide variety of dog breeds, and the app was tested in various lighting conditions to improve the nose print detection technology

Hundreds of pictures were collected to improve AI models to accurately identify each dog’s nose


Zocdoc sourced a wide mix of testers to interact with their AI virtual assistant

A mix of testers with different backgrounds and wellness concerns was recruited

Testers went through the website workflow to book appointments

Users interacted with an AI virtual assistant, allowing the company to collect real audio and conversational data and refine its AI models
